When crafting surveys or running usability tests, one rule sits near the top: do not use leading questions.

The classics:

“How easy was it to use our new checkout?”

“How would you describe your experience with checkout?”

The first quietly plants an idea. The second allows the user to bring their own reality.

The same thing happens with AI.

When you ask, “Why is feature X so good?”, you are not really asking for analysis. You are priming the system with the conclusion you want. From there, it does exactly what it is designed to do: support the direction you have given it.

Change the framing and the outcome changes.

A simple example:

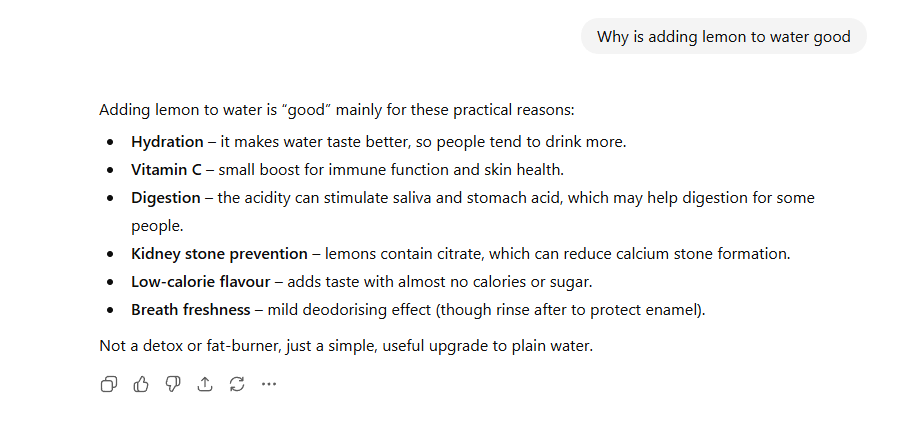

Ask, “Why is it good to add lemon to water?” and you will get a neat list of benefits such as antioxidants, hydration, digestion, and kidney stone prevention.

Ask, “Why is it bad to add lemon to water?” and suddenly the answer is about tooth enamel erosion, acid reflux, and sensitivity.

Ask instead, “What happens when you add lemon to water?” and you get both sides: the benefits, the drawbacks, and the recommendation to use it in moderation. The topic has not changed. Only the framing has.

AI is not being deceptive. It is being obedient. The problem is that obedience can easily look like accuracy.

Leading Questions Create Pre-Filtered Answers

With AI, a leading question does not just bias the tone. It filters the information space. You decide in advance what matters, what is ignored, and what “good” or “bad” even means. Instead of discovering something new, you receive a polished explanation of the story you already had in mind.

It feels productive.

It feels intelligent.

It feels fast.

It is still an echo.

Why This Matters for Product Decisions

Teams increasingly use AI to synthesise research, generate insights, write problem statements, shape roadmaps, and justify design direction.

If those outputs are built on leading prompts, you end up stacking assumptions on top of assumptions. This is how teams arrive at confident recommendations built on thin evidence, features that solve imaginary problems, and “user needs” that sound convincing in presentations but collapse in reality. The cost is not theoretical. It shows up as rework.

A simple rule when you’re using LLMs for research: if your question contains the answer, rewrite it. The same rule applies when you’re crafting surveys.

Instead of:

“Why do users like…”

“What makes this better…”

“Why is this important…”

Try:

“How do users experience…”

“What are the trade-offs…”

“What changes when…”
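If you want to apply that rule mechanically, a rough sketch of a prompt check might look like the one below. It is only an illustration: the function name `find_leading_framing` and the pattern list are hypothetical, seeded from the example phrasings in this post, and a handful of regular expressions will miss many leading questions and may flag some harmless ones.

```python
import re

# Hypothetical patterns based on the phrasings discussed in this post.
# Each entry pairs a regex with a short note on what the wording presupposes.
LEADING_PATTERNS = [
    (re.compile(r"^\s*why (do|does|did) .+ (like|love|prefer)\b", re.I),
     "assumes users already like it"),
    (re.compile(r"^\s*what makes .+ (better|best|great)\b", re.I),
     "assumes it is better"),
    (re.compile(r"^\s*why is .+ (so )?(good|bad|important|great|easy)\b", re.I),
     "assumes the verdict"),
    (re.compile(r"^\s*how (easy|good|great|useful)\b", re.I),
     "plants a quality judgement"),
]


def find_leading_framing(prompt: str) -> list[str]:
    """Return a note for every leading pattern the prompt matches.

    An empty list means none of the known patterns matched. It does not
    prove that the prompt is neutral.
    """
    return [note for pattern, note in LEADING_PATTERNS if pattern.search(prompt)]


if __name__ == "__main__":
    for prompt in (
        "Why is it good to add lemon to water?",
        "What happens when you add lemon to water?",
    ):
        notes = find_leading_framing(prompt)
        print(prompt, "->", notes if notes else "no known leading pattern")
```

A check like this is best treated as a nudge to reread the prompt, not as a verdict. The rewrite itself still needs a human deciding what the open version of the question should be.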

The Payoff: Actual Insight

Neutral prompts surface contradictions, edge cases, uncomfortable truths, trade-offs, and things you did not expect to hear. That is what research is meant to uncover.

AI becomes a thinking partner rather than a validation machine. You stop designing for the story you prefer and start designing for the reality you have.

Less comfortable. Far more useful.